Gemini LLM Chatbot | Gemini Pro Model

The project is a Streamlit-based chatbot application built on Google's Gemini large language model (LLM). Users enter a question, and the chatbot responds in real time with an answer generated by the Gemini model. The app maintains a chat history that records both the user's queries and the AI's responses, allowing for an interactive conversation. It uses the Google Gemini API to generate responses and displays the dialogue through the Streamlit interface.

Services:

- LLM

- GenAI

Client:

Personal

Project link:

https://github.com/MorshedulHoque/Chatbot---Gemini-Pro

Duration:

N/A

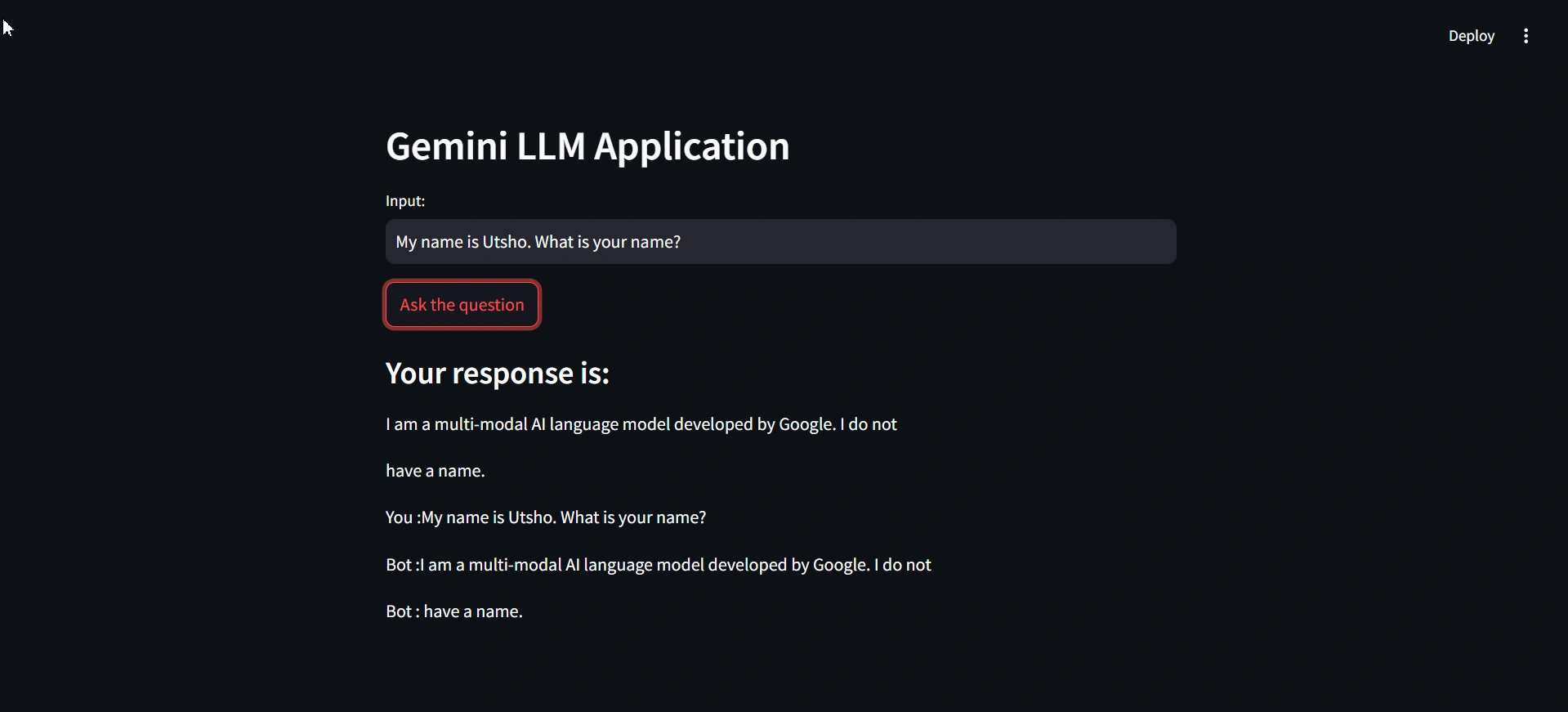

This chatbot application is built on Google's Gemini LLM. It reads sensitive configuration from environment variables and uses a Streamlit interface to interact with users. The chatbot responds to user queries in real time and keeps a history of the conversation, allowing for context-aware interactions within a session.
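The repository's exact code is not reproduced here. As a minimal sketch of how a session history could be passed back to the model as context, assume the app stores the conversation as (role, text) pairs labelled "You" and "Bot" (hypothetical labels). The hypothetical helper `to_gemini_history` converts that list into the role/parts structure accepted by `start_chat(history=...)` in the google-generativeai package, using "user" for the person and "model" for Gemini.

```python
from typing import Dict, List, Tuple

# Hypothetical shape of the stored chat history: (role, text) pairs, where
# "You" marks the user's questions and "Bot" marks Gemini's replies.
ChatHistory = List[Tuple[str, str]]

# Maps the assumed display labels to the role names Gemini uses.
ROLE_MAP = {"You": "user", "Bot": "model"}


def to_gemini_history(history: ChatHistory) -> List[Dict[str, object]]:
    """Convert (role, text) pairs into role/parts dicts for start_chat(history=...)."""
    contents = []
    for role, text in history:
        contents.append({"role": ROLE_MAP[role], "parts": [text]})
    return contents


if __name__ == "__main__":
    demo: ChatHistory = [("You", "What is Streamlit?"), ("Bot", "A Python web app framework.")]
    print(to_gemini_history(demo))
```

For example, `model.start_chat(history=to_gemini_history(history))` would open a chat session that already includes the earlier turns.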

Features

- Environment Variables: Loads sensitive data, such as the Google API key, from a .env file using the python-dotenv package.

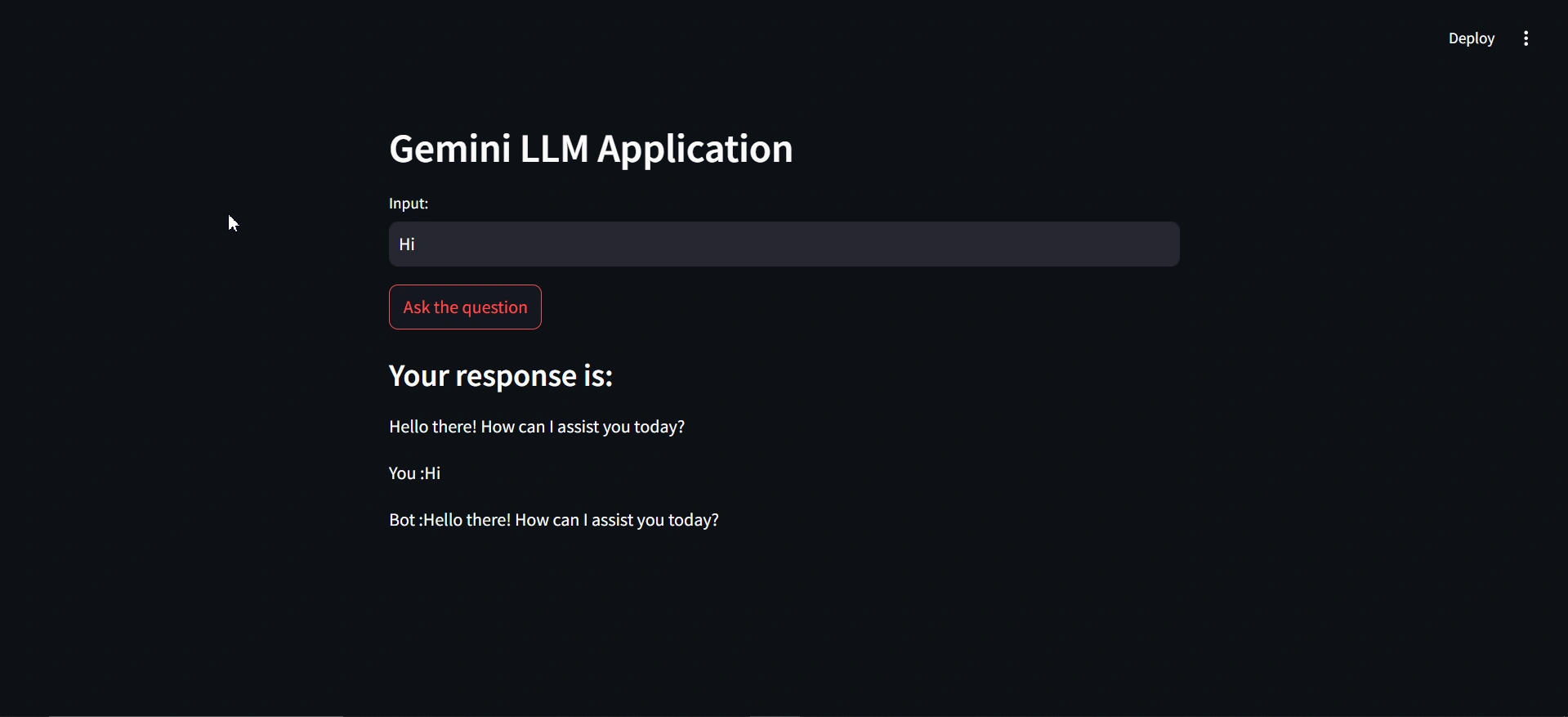

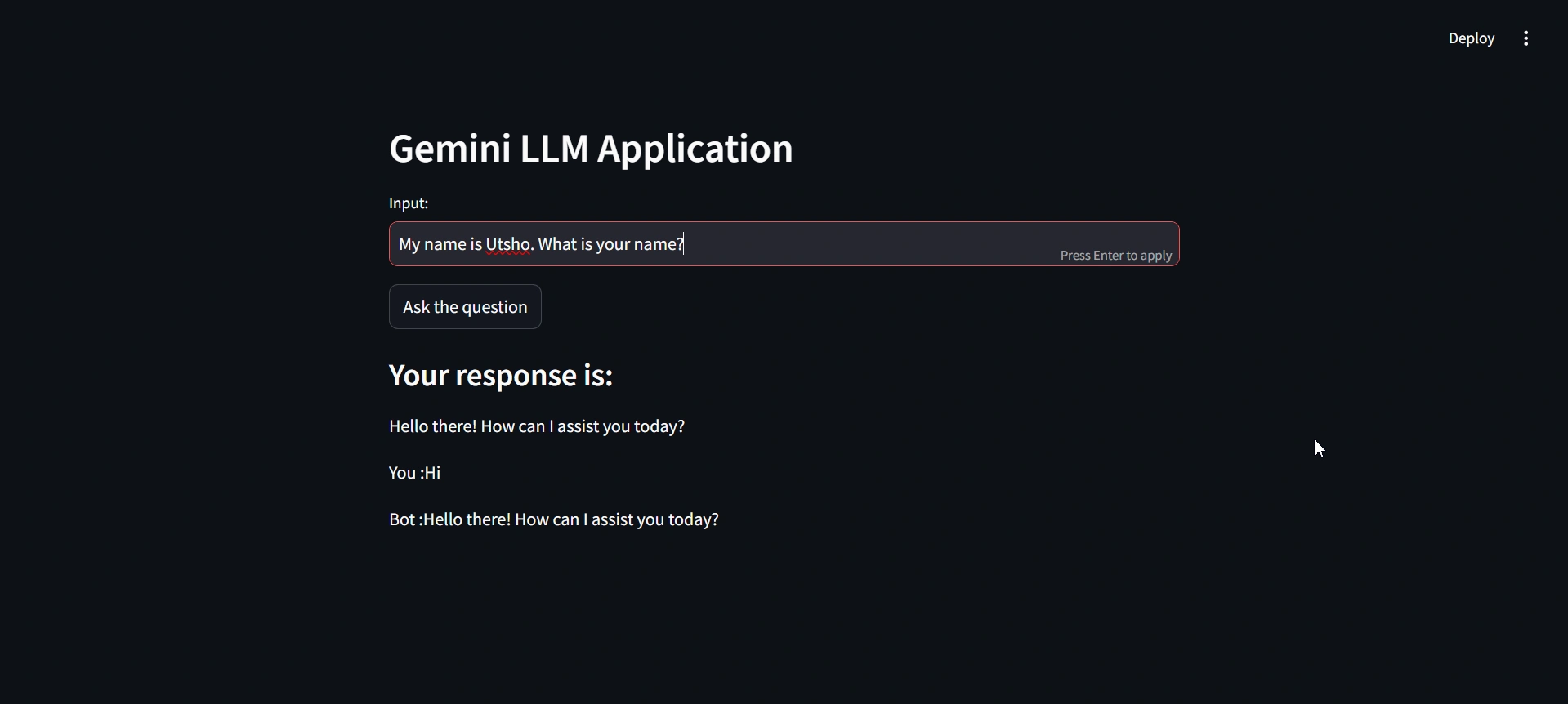

- Streamlit Interface: Offers a user-friendly, web-based interface for real-time interactions.

- Chat History: Maintains a session-based history of conversations for context retention.

- Gemini LLM Integration: Leverages Google's Gemini LLM for dynamic and intelligent responses.
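A minimal sketch of the environment-variable step, assuming the key is stored in a `.env` file under the hypothetical name `GOOGLE_API_KEY`. The hypothetical helper `load_api_key` calls python-dotenv's `load_dotenv` when the package is installed and otherwise falls back to a small KEY=VALUE parser written only for illustration, which handles far less syntax than python-dotenv does.

```python
import os
from pathlib import Path
from typing import Optional


def load_api_key(env_path: str = ".env", var_name: str = "GOOGLE_API_KEY") -> Optional[str]:
    """Load a .env file into os.environ, then return the value of var_name.

    Existing environment variables are never overwritten. GOOGLE_API_KEY is an
    assumed variable name, not confirmed by the project.
    """
    try:
        from dotenv import load_dotenv  # provided by the python-dotenv package

        load_dotenv(dotenv_path=env_path)  # default override=False keeps existing values
    except ImportError:
        # Illustrative fallback parser: plain KEY=VALUE lines only.
        path = Path(env_path)
        if path.is_file():
            for raw in path.read_text(encoding="utf-8").splitlines():
                line = raw.strip()
                # Skip blank lines, comments, and lines without an '='.
                if not line or line.startswith("#") or "=" not in line:
                    continue
                key, value = line.split("=", 1)
                # Trim whitespace and one layer of matching quotes.
                value = value.strip()
                if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
                    value = value[1:-1]
                os.environ.setdefault(key.strip(), value)
    return os.getenv(var_name)
```

Keeping the key in `.env` (and out of version control) means the script never contains the secret itself; the returned value can then be passed to `genai.configure(api_key=...)`.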

Prerequisites

- Python 3.8+

- Streamlit

- python-dotenv package

- os (Python standard library; no installation needed)

- google-generativeai package (imported as genai)
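The list above mixes installable packages with the standard-library os module. A minimal sketch for confirming that everything is available, assuming the usual import names (`dotenv` for python-dotenv, `google.generativeai` for the Gemini package); `check_prerequisites` is a hypothetical helper, not part of the project.

```python
import importlib.util
import sys
from typing import Dict, Iterable

# Assumed import names for the prerequisites listed above.
REQUIRED_MODULES = ("streamlit", "dotenv", "os", "google.generativeai")


def check_prerequisites(modules: Iterable[str] = REQUIRED_MODULES) -> Dict[str, bool]:
    """Report whether Python is 3.8+ and whether each module can be found."""
    report = {"python>=3.8": sys.version_info >= (3, 8)}
    for name in modules:
        try:
            # find_spec locates a module without running it; for a dotted name
            # it imports the parent package, which raises if that is missing.
            report[name] = importlib.util.find_spec(name) is not None
        except ModuleNotFoundError:
            report[name] = False
    return report


if __name__ == "__main__":
    for item, ok in check_prerequisites().items():
        print(f"{'OK' if ok else 'MISSING'}  {item}")
```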

Usage

- Enter your query in the text input box and submit.

- View your question and Gemini's response on the screen; previous interactions are saved in the session state for context.
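A minimal sketch of the usage flow above, not the repository's actual code. The hypothetical `record_turn` sends a question, hands each streamed text chunk to an optional callback so it can be shown as it arrives, and appends both sides of the exchange to the history. The hypothetical `make_gemini_ask` wraps a google-generativeai chat session, and `render_page` draws the Streamlit page. The session-state key `chat_history`, the widget labels, the "You"/"Bot" role labels, and the model name `gemini-pro` (taken from the project title; it may need updating to a model the API currently offers) are all assumptions. Third-party imports sit inside the functions so `record_turn` can run without them.

```python
from typing import Callable, Iterable, List, Optional, Tuple

# Assumed history shape: (role, text) pairs with "You" and "Bot" labels.
ChatHistory = List[Tuple[str, str]]
AskFn = Callable[[str], Iterable[str]]


def record_turn(history: ChatHistory, question: str, ask: AskFn,
                on_chunk: Optional[Callable[[str], None]] = None) -> str:
    """Send one question, stream the reply, and append both sides to history."""
    parts = []
    for piece in ask(question):
        parts.append(piece)
        if on_chunk is not None:
            on_chunk(piece)  # e.g. st.write, to show text as it arrives
    reply = "".join(parts)
    history.append(("You", question))
    history.append(("Bot", reply))
    return reply


def make_gemini_ask(api_key: str, model_name: str = "gemini-pro") -> AskFn:
    """Wrap a Gemini chat session as an ask() callable that yields text chunks."""
    import google.generativeai as genai  # lazy import: only needed for real calls

    genai.configure(api_key=api_key)
    chat = genai.GenerativeModel(model_name).start_chat(history=[])

    def ask(question: str) -> Iterable[str]:
        # stream=True returns the response in chunks, each exposing .text
        for chunk in chat.send_message(question, stream=True):
            yield chunk.text

    return ask


def render_page(ask: AskFn) -> None:
    """Draw the input box, handle a submission, and show the stored history."""
    import streamlit as st  # lazy import: only needed when serving the page

    st.header("Gemini LLM Chatbot")
    if "chat_history" not in st.session_state:
        st.session_state["chat_history"] = []  # persists across reruns in a session

    question = st.text_input("Input:", key="input")
    if st.button("Ask") and question:
        st.subheader("Response")
        record_turn(st.session_state["chat_history"], question, ask, on_chunk=st.write)

    st.subheader("Chat history")
    for role, text in st.session_state["chat_history"]:
        st.write(f"{role}: {text}")
```

In a script launched with `streamlit run`, one could call `render_page(make_gemini_ask(api_key))`. Because Streamlit reruns the whole script on every interaction, this creates a new Gemini chat session each time unless the `ask` callable is itself kept in `st.session_state`; the displayed history persists either way.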